General FAQ

For questions about specific software such as Python, Open OnDemand, or custom installations, visit the Applications FAQ.

Accounts and Investment

How do I obtain a HiPerGator account?

HiPerGator (HPG) accounts cannot be created by users, but can be requested with a valid sponsor's approval. Please submit a request via the account request form.

How do I purchase HiPerGator resources or reinvest in expired allocations?

If you're a sponsor or account manager, please fill out a purchase form.

How do I add users to a group?

To gain access to a given group, users must submit a ticket via the RC Support Ticketing System with a subject line similar to "Add (username) to (groupname) group".

What is HiPerGator-RV?

HiPerGator-RV is a secure environment for research projects that use electronic Protected Health Information (ePHI), which are required to comply with the Health Insurance Portability and Accountability Act (HIPAA). ResVault can also be used for projects that require a “low” FISMA compliance rating, and for projects that require International Traffic in Arms Regulations (ITAR) compliance.

These accounts are for our Restricted Computing users. They can be requested on the HiPerGator-RV Accounts page.

I can't log in to my HPG account.

Login issues may be caused by one of the following:

- Entering your password incorrectly several times.

- Entering an incorrect password in SFTP clients.

- Misusing resources on a cluster, resulting in suspension of the account.

Visit our Blocked Accounts page for more detailed information.

How do I change or recover my HiPerGator password?

UFIT Research Computing does not manage user credentials. GatorLink or Federated Authentication credentials are used throughout the system.

- For GatorLink accounts, visit the GatorLink Account Management page for all GatorLink account needs, or contact the UFIT Help Desk.

- For Federated accounts, use your local account management tools or contact your local support.

How do I get help with my HiPerGator account?

If you encounter problems or have questions, please open a support ticket. Support tickets provide a traceable, permanent record of your issue and are systematically reviewed on a daily basis to ensure they are addressed as quickly as possible.

How can I find out which allocations have expired or are about to expire?

Use the showAllocation tool in the 'ufrc' environment module. See the UFRC Environment Module page for a reference on all HPG tools.

What happens to my HiPerGator account when I leave UF?

The answer to this question depends mostly on how your affiliation with UF will change when you leave the university. If the new affiliation with UF does not allow for you to have an active GatorLink account, you will no longer be able to access HiPerGator.

If you will need to maintain access to your HiPerGator account after leaving UF, contact your group sponsor and request to be affiliated within their department as a “Departmental Associate.” This will ensure your GatorLink account, and therefore HiPerGator account, remain active after your previous affiliations are removed.

Of course, general UFIT Research Computing Account Policies are still in effect; any HiPerGator accounts that remain inactive for more than one year are automatically deactivated.

Job Management

What are the differences between batch jobs, interactive jobs, and GUI jobs?

A batch job is submitted to the batch system via a job script passed to the sbatch command. Once queued, a batch job will run on resources chosen by the scheduler. While a batch job runs, a user cannot interact with it. View a walk-through of an example batch job script.

An interactive job is any process that is run at the command line prompt, generally used for developing code or testing job scripts. Interactive jobs should only be run in an interactive development session, which is requested with the srundev command. As soon as the necessary compute resources are available, the job scheduler will start the interactive session. View the Interactive Development & Testing documentation page.

A GUI job uses HiPerGator compute resources to run an application but displays the application’s graphical user interface (GUI) on the local client computer. GUI sessions are also managed by the job scheduler, but require additional software to be installed on the client-side computer. View the GUI Programs documentation page.

What are the wall time limits for each partition and QoS?

| Partition | Default Time Limit | Wall Time Limit |
|---|---|---|
| hpg-default | 10 minutes | 31 days |
| hpg-dev | 10 minutes | 12 hours |
| bigmem | 10 minutes | 31 days |
| hpg-milan | 10 minutes | 31 days |
| hpg-b200 | 10 minutes | 14 days |
| hpg-turin | 10 minutes | 14 days |
| hwgui | 10 minutes | 4 days |
| Compute partitions, investment QoS | 10 minutes | 31 days |
| Compute partitions, burst QoS | 10 minutes | 4 days |

How can I check how busy HiPerGator is?

Use the following command to view how busy the cluster is:

$ sinfo

How can I check my group's allocation use?

Load the ufrc module, then use slurmInfo to view your group's allocation and how much of it is currently in use:

$ module load ufrc

$ slurmInfo

Scheduler

What is a batch system? / What is a job scheduler?

The purpose of a batch system is to execute a series of tasks in a computer program without user intervention (non-interactive jobs). The operation of each program is defined by a set or batch of inputs, submitted by users to the system as “job scripts.”

When job scripts are submitted to the system, the job scheduler determines how each job is queued. If there are no queued jobs of higher priority, the submitted job will run once the necessary compute resources become available. Please see our SLURM docs page for more information.

How do I submit a job to the batch system?

To submit a job, you first need to create a job script. This script contains the commands that the batch system will execute on your behalf. The primary job submission mechanism is the sbatch command, run from the Linux command line interface:

$ sbatch <your_job_script>
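For illustration, here is a minimal sketch of a job script that could be passed to sbatch. The job name, resource amounts, and log file name are placeholder assumptions rather than recommended values. sbatch reads the #SBATCH lines as resource requests, while bash treats them as ordinary comments.

```bash
#!/bin/bash
#SBATCH --job-name=example_job        # hypothetical job name
#SBATCH --ntasks=1                    # a single task (process)
#SBATCH --cpus-per-task=1             # one CPU core for that task
#SBATCH --mem=2gb                     # memory for the whole job; raise it if the job is OOM-killed
#SBATCH --time=00:10:00               # wall time limit (HH:MM:SS)
#SBATCH --output=example_job_%j.log   # %j is replaced by the job ID

# SLURM_JOB_ID is set by the scheduler; the fallback lets the script also run outside SLURM.
job_id="${SLURM_JOB_ID:-not-under-slurm}"

echo "Job ${job_id} running on ${HOSTNAME}"
echo "Started at $(date)"

# Replace the lines above with the commands your job should run.
```

Saved under a name such as example_job.sh (a hypothetical name), it would be submitted with sbatch example_job.sh.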

How do I check the status of my jobs?

You can easily check the status of your jobs using the Job Status utility. To navigate to the utility from the UFIT Research Computing website, use the Access menu header along the top of each page.

Alternatively, you can use the following command to check the status of the jobs you’ve submitted:

$ squeue -u <user_name>

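To view the jobs of everyone in your group, use the -A flag with your group name:

$ squeue -A <group_name>

To choose which columns are shown, add the -O (--Format) option:

$ squeue -O jobarrayid,qos,name,username,timelimit,numcpus,reasonlist -A <group_name>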

How do I delete a job from the batch system?

You can use the following command to delete jobs from the queue. You can only delete jobs that you submitted.

$ scancel <job_id>

Why did my job die with the message '/bin/bash: bad interpreter: No such file or directory'?

This is typically caused by hidden carriage-return characters (Windows-style line endings) in your job script, which the command interpreter does not understand. If you created your script on a Windows machine and copied it to the cluster, convert it by running:

$ dos2unix <your_job_script>
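If you want to check a script before submitting it, or dos2unix is not at hand, the carriage returns can also be found with grep and removed with sed. The two function names below are hypothetical helpers for illustration, and the in-place -i option assumes GNU sed, as found on typical Linux clusters.

```bash
#!/bin/bash

# Return success (0) if the file contains any carriage-return characters,
# the usual sign of Windows (CRLF) line endings.
has_crlf() {
    grep -q $'\r' "$1"
}

# Remove a trailing carriage return from every line, editing the file in place.
# $'...' makes bash insert a literal CR into the sed expression.
strip_crlf() {
    sed -i $'s/\r$//' "$1"
}
```

For example, has_crlf my_job.sh && strip_crlf my_job.sh converts the (hypothetical) script my_job.sh only when it actually contains Windows line endings.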

Resource Use

How can I check what compute resources are available for me to use?

Use the following command to view your group’s total resource allocation, as well as how much of the allocation is in use at the given instant.

$ module load ufrc

$ slurmInfo <group_name>

Allocation information is returned for both the investment QOS and the burst QOS of the given group.

What do 'OOM', 'oom-kill event(s)', or 'out of memory' errors in the job log mean?

Short answer: request more memory when you resubmit the job. Long answer: each HiPerGator job or session runs with CPU core, memory, and time limits set by its resource request. Exceeding either the memory or the time limit terminates the job, whereas the CPU core limit can severely affect the job's performance in some cases but will not terminate it. See Account and QOS limits under SLURM for a thorough explanation of resource limits. Read Out Of Memory for additional considerations.
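As a sketch, you could compare what a finished job requested with what it actually used by querying sacct, then resubmit with a larger --mem value; options given to sbatch on the command line override the matching #SBATCH lines in the script. The helper name job_memory_report, the job ID, the script name, and the 8gb figure are illustrative placeholders, not recommendations.

```bash
#!/bin/bash

# Show requested memory (ReqMem) next to peak resident memory (MaxRSS)
# for each step of a finished job; a State of OUT_OF_MEMORY confirms an OOM kill.
job_memory_report() {
    sacct -j "$1" --format=JobID,JobName,State,ReqMem,MaxRSS,Elapsed
}

# Example usage (hypothetical job ID and script name):
#   job_memory_report 12345678
#   sbatch --mem=8gb my_job.sh   # resubmit with more memory than before
```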

How do I run applications that use multiple processors (i.e. parallel computing)?

Parallel computing refers to the use of multiple processors to run multiple computational tasks simultaneously. Communications between tasks use one of the following interfaces, depending on the task:

- OpenMP – used for communication between tasks running concurrently on the same node with access to shared memory

- MPI (OpenMPI) – used for communication between tasks which use distributed memory

- Hybrid – a combination of both OpenMP and MPI interfaces
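As a sketch of the OpenMP case, the job script below requests one task with several cores on a single node and tells the OpenMP runtime to use as many threads as cores allocated. The program name ./my_openmp_app and the resource values are hypothetical placeholders. An MPI job would instead typically request multiple tasks (for example with --ntasks) and launch the program with srun.

```bash
#!/bin/bash
#SBATCH --job-name=openmp_example   # hypothetical job name
#SBATCH --nodes=1                   # shared-memory threads must stay on one node
#SBATCH --ntasks=1                  # a single process...
#SBATCH --cpus-per-task=4           # ...with 4 cores for its OpenMP threads
#SBATCH --mem=4gb                   # memory for the whole job
#SBATCH --time=01:00:00             # wall time limit (HH:MM:SS)

# Match the OpenMP thread count to the cores SLURM allocated to this task.
# Outside SLURM, SLURM_CPUS_PER_TASK is unset, so fall back to a single thread.
export OMP_NUM_THREADS="${SLURM_CPUS_PER_TASK:-1}"
echo "Using ${OMP_NUM_THREADS} OpenMP thread(s)"

# Hypothetical OpenMP program; replace with your own executable.
if [[ -x ./my_openmp_app ]]; then
    ./my_openmp_app
else
    echo "./my_openmp_app not found; replace it with your program" >&2
fi
```

Deriving OMP_NUM_THREADS from SLURM_CPUS_PER_TASK keeps the thread count in step with the core request, so changing --cpus-per-task alone is enough to rescale the job.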

Why do I get the error 'Invalid qos specification' when I submit a job?

If you get this error, it is most likely for one of the following reasons:

- You submitted a job with a specified qos for which you are not a group member.

- Your group does not have a computational allocation.

To check which groups you are a member of, log in to the cluster and use the following command:

$ groups <user_name>

To check the allocation of a particular group, log in to the cluster and use the following commands:

$ module load ufrc

$ slurmInfo <group_name>

Why do I get the error 'slurmstepd: Exceeded job memory limit at some point'?

Sometimes, SLURM will log the error slurmstepd: Exceeded job memory limit at some point. This appears to be due to memory used for cache and page files triggering the warning. The process that enforces the job memory limits does not kill the job, but the warning is logged. The warning can be safely ignored. If your job truly does exceed the memory request, the error message will look like:

slurmstepd: Job 5019 exceeded memory limit (1292 > 1024), being killed

slurmstepd: Exceeded job memory limit

slurmstepd: *** JOB 5019 ON dev1 CANCELLED AT 2016-05-16T15:33:27 ***

GPU Use

Why has my GPU job been pending in the SLURM queue for a long time?

- All of your group’s allocated GPUs may be in use.

- You are requesting more GPUs in a single-node job than are available on one node. Note that B200 nodes have 8 GPUs each and L4 nodes have 3 GPUs each, in their respective partitions.

- Your job is requesting one or more B200 GPUs. The B200 GPUs on HiPerGator are in extremely high demand, so jobs requesting them should expect long pending times. However, there are typically many L4 GPUs available, so jobs requesting L4 GPUs are expected to start promptly. For reference, the L4 GPUs have 24GB of onboard memory, compared to 180GB on the B200 cards.

See GPU Access and Slurm and GPU use for more information on the hardware and selecting a GPU for a job. Use the ‘slurmInfo’ command to see your group’s current GPU usage.

What's the difference between GPU and HWGUI partitions?

HWGUI partitions are technically GPU partitions, but HWGUI is dedicated to visualization for software whose GUI requires hardware acceleration; it is not intended for high-performance computing the way the GPU partitions are.

How many CPUs/GPUs can I use?

Load the ufrc module and run the slurmInfo command to see the resources available to your groups. You can use additional resources by choosing the burst QOS. Learn more at Account and QOS limits under SLURM. For more information about HiPerGator's SLURM scheduler, please visit the Scheduling documentation.

Storage

What types of storage are available and how should each type be used?

The three main types of storage are home, blue, and orange. Home storage is a small quota used to store files important for setting up user shell environments and secure shell connections. Blue storage is our main high-performance parallel filesystem, where all job input/output should be performed. Orange storage is intended primarily for archival purposes. Please see our Storage Documentation Page for more detailed information.

Why can't I run jobs in my home directory?

Home directories are intended for relatively small amounts of human-readable data such as text files, shell scripts, and source code. Neither the servers nor the file systems on which the home directories reside can sustain the load associated with a large cluster. Overall system response will be severely impacted if they are subjected to such a load. This is by design, and is the reason all job I/O must be directed to the network file systems such as blue or orange.

Can my storage quota be increased?

You can request a temporary quota increase. Submit a support request and indicate the following:

- How much additional space you need

- The file system on which you need it

- How long you will need it

Additional space is granted at the discretion of UFIT Research Computing on an “as available” basis for short periods of time. If you need more space on a long-term basis, please review our Storage Purchase Request Page or Contact Us to discuss an appropriate solution for your needs.

I can't see my (or my group's) /blue or /orange folders!

Listing /blue or /orange directly will not show your group's directory tree. Each group directory is automatically connected (mounted) when you try to access it in any way, e.g. with an 'ls' or 'cd' command. For example, if your group name is 'mygroup', list or cd into /blue/mygroup or /orange/mygroup. If you are using Jupyter Notebook or another GUI or web application that makes it difficult to browse to a specific path, you can create a symlink (shortcut):

$ ln -s <path_to_link_to> <name_of_link>
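For example, to give a Jupyter file browser a shortcut to your group's blue directory, you could create a link in your home directory. The group name mygroup and the link name are placeholders, and the /blue/<group>/<user> layout is an assumption; point the link at whichever directory you actually use.

```bash
#!/bin/bash

group="mygroup"                  # placeholder: replace with your group name
user="${USER:-$(id -un)}"        # your username
target="/blue/${group}/${user}"  # assumed location of your directory on blue
link="${HOME}/blue_${group}"     # shortcut created inside your home directory

# -s creates a symbolic link; -f replaces an existing link of the same name;
# -n treats an existing link to a directory as a plain file instead of descending into it.
ln -sfn "$target" "$link"

# Show where the link points (-l with -d lists the link itself without following it).
ls -ld "$link"
```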

How can I check my storage quota and current usage?

Log in to your Research Computing account using SSH and use the blue_quota or orange_quota command to see the quota information for both your user account and primary group. Please visit our File System Quotas page for more detailed documentation.

Why do I see "No Space Left" in job output or an application error?

If you see a 'No Space Left' or similar message (no quota remaining, etc.), check the path(s) in the error message closely for 'home', 'orange', or 'blue', and check the respective quota for that filesystem.

A convenient interactive tool for seeing what is taking up your storage quota is the ncdu command in a bash terminal. Run it, then delete data or move it to different storage to free up space.
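Because ncdu is interactive, a quick non-interactive listing (for example, inside a job log) can be approximated with the standard du and sort commands. The function name largest_items is a hypothetical helper, and sizes are reported in kilobytes.

```bash
#!/bin/bash

# Print the N largest files/directories directly under DIR, smallest first,
# with sizes in kilobytes as reported by du. Hidden entries are not included.
largest_items() {
    local dir="${1:-.}"
    local n="${2:-10}"
    du -sk -- "$dir"/* 2>/dev/null | sort -n | tail -n "$n"
}
```

For example, largest_items "/blue/mygroup/$USER" 10 (with mygroup as a placeholder group name) lists the ten largest items in that directory.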

If the data that's taking up most of the space is related to application environments and packages such as conda, pip, or singularity, you can modify your configuration file to update the default directories for custom installs. You can find more information about the .condarc setup here: Conda

Software

What software applications are available?

The full list of applications installed on the cluster is available at the Installed Software docs page.

May I submit an installation request for an application?

Yes, if the software you need is not listed on our Installed Software page, you may submit a support request to have it installed by UFIT Research Computing staff. Please observe the following guidelines:

- Please provide a link to the web site from which to download the software

- If there are multiple versions, be specific about the version you want

- Let us know if you require any options that are not a standard part of the application

- If the effort required to install the software is 4 hours or less, the request is placed in the work queue to be installed once an RC staff member is available to perform the work, usually within a few business days.

If initial evaluation of the request reveals that the effort is significantly greater than 4 hours, we will contact you to discuss how the work can be performed. It may be necessary to hire UFIT Research Computing staff as a consulting service to complete large and complex projects.

Note you may also install applications yourself in your home directory.

Please only ask us to install applications that you know will meet your needs and that you intend to use extensively. We do not have the resources to build applications for testing and evaluation purposes.

What software applications can run on the GPU partition?

GPU-accelerated computing is intended for use by highly parallel applications, where computation on a large amount of data can be broken into many small tasks performing the same operation, to be executed simultaneously. More simply put, large problems are divided into smaller ones, which can then be solved at the same time.

Since the GPU is a special-purpose architecture, it supports restricted programming models; one such model is NVIDIA’s CUDA. On HiPerGator, only applications that were written in CUDA can run on the GPU partition. Currently, these applications are:

Why do I get the 'command not found' error message?

The Linux command interpreter (shell) maintains a list of directories in which to look for commands that are entered on the command line. This list is maintained in the PATH environment variable. If the full path to the command is not specified, the shell will search the list of directories in the PATH environment variable and if a match is not found, you will get the “command not found” message. A similar mechanism exists for dynamically linked libraries using the LD_LIBRARY_PATH environment variable.
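The PATH lookup can be observed directly in the shell. The sketch below creates a throwaway directory holding a hypothetical command named mytool, shows that the shell cannot find it until that directory is added to PATH, and uses command -v to report where a command resolves. Loading a module performs this kind of PATH update for you.

```bash
#!/bin/bash

# Create a temporary directory containing a hypothetical executable "mytool".
tool_dir="$(mktemp -d)"
printf '#!/bin/sh\necho "mytool ran"\n' > "${tool_dir}/mytool"
chmod +x "${tool_dir}/mytool"

# Not on PATH yet: command -v prints nothing and returns non-zero,
# the same situation that produces "command not found".
if ! command -v mytool >/dev/null; then
    echo "mytool: not found on PATH"
fi

# Prepend the directory to PATH, as loading a module would for an application.
export PATH="${tool_dir}:${PATH}"

# Now the shell finds the command by searching the directories in PATH.
command -v mytool
mytool
```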

To ease the burden of setting and resetting environment variables for different applications, we have installed a “modules” system. Each application has an associated module which, when loaded, will set or reset whatever environment variables are required to run that application – including the PATH and LD_LIBRARY_PATH variables.

The easiest way to avoid “command not found” messages is to ensure that you have loaded the module for your application. See the Modules Basic Usage page for more information.

Development

How do I develop and test software?

You should use the interactive test nodes for software development and testing. These nodes are kept consistent with the software environment on the computational servers, so you can be confident that if your code works on a test machine, it will work via the batch system. Connect to the cluster and use the following commands to start a development session:

$ module load ufrc

$ srundev

The srundev command accepts options to request additional time, processors, or memory, which default to 10 minutes, 1 core, and 2GB of memory, respectively. For example, to request a 60-minute session with 4 cores and 4GB of memory per core (16GB in total), use:

$ module load ufrc

$ srundev --time=60 --cpus-per-task=4 --mem-per-cpu=4gb

Generally speaking, we use modules to manage our software environment, including our PATH and LD_LIBRARY_PATH environment variables. To use any available software package that is not part of the default environment, including compilers, you must load the associated modules. For example, to use the Intel compilers and link against the fftw3 libraries, you would first run:

$ module load intel

$ module load fftw

Which may be collapsed to the single command:

$ module load intel fftw

What compilers are available?

We have two compiler suites, the GNU Compiler Collection (GCC) and the Intel Compiler Suite (Composer XE). The default environment provides access to the GNU Compiler collection while the Composer XE may be accessed by loading the intel module (preferably, the latest version).

Projects With Restricted Data

What do I need to do to work on HiPerGator with patient health information (PHI) restricted by HIPAA?

HiPerGator meets the security and compliance requirements of the HITRUST standard. To set up a project that works with PHI, you must adhere to the policies and follow the procedures listed here.

What do I need to do to work on HiPerGator with student data restricted by FERPA?

HiPerGator meets the security and compliance requirements of the HITRUST standard. To set up a project that works with FERPA-restricted data, you must adhere to the policies and follow the procedures listed here.

What do I need to do to work on HiPerGator with export controlled data restricted by ITAR/EAR?

HiPerGator has a secure enclave called ResVault that has been certified as compliant with NIST 800-171 and 800-53 (moderate), as required for handling controlled unclassified information (CUI) as specified in the DFARS. Follow the policies and procedures listed here.

Performance

What hardware is in HiPerGator?

HiPerGator's compute and storage capabilities change regularly. Please see our About HiPerGator page for specific hardware details.

Why is HiPerGator running so slow?

There are many reasons why users may experience unusually low performance while using HPG. First, users should make sure the performance issues do not originate from their Internet service provider, home network, or personal devices.

Once these possible causes are ruled out, users should report the issue as soon as possible via the RC Support Ticketing System. When reporting the issue, please include detailed information such as:

- Time when the issue occurred

- JobID

- Nodes being used, e.g. username@hpg-node$. Note: Login nodes are not high-performance nodes, and intensive jobs should not be run on them.

- Paths, file names, etc.

- Operating system

- Method for accessing HPG: JupyterHub, Open OnDemand, or the terminal interface used.

Are there profiling tools installed on HiPerGator that help identify performance bottlenecks?

REMORA is the most general-purpose profiling tool we have on the cluster. More specific tools depend on the application stack or the language, e.g. cProfile for Python code, Nsight Compute for CUDA applications, or VTune for C/C++ and MPI code.
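As an example of the language-specific route, Python's built-in cProfile module can be run from the command line to profile a whole script, with the report sorted by cumulative time. The file example_script.py is a hypothetical stand-in for your own code; on HiPerGator you would normally load a Python module first so that python3 refers to the version you intend to use.

```bash
#!/bin/bash

# Hypothetical stand-in for your own Python script.
cat > example_script.py <<'EOF'
def slow_sum(n):
    return sum(i * i for i in range(n))

print(slow_sum(200000))
EOF

# Profile the whole script; -s cumulative sorts the report by the total time
# spent in each function, including the functions it calls.
python3 -m cProfile -s cumulative example_script.py
```

Sorting by cumulative time tends to surface the top-level functions that dominate the run, which is usually the first thing to look at when hunting a bottleneck.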

Why is my job still pending?

According to SLURM documentation, when a job cannot be started a reason is immediately found and recorded in the job's "reason" field in the squeue output and the scheduler moves on to the next job to consider.

Related article: Account and QOS limits under SLURM

Common reasons why jobs are pending

| Reason | Explanation |
|---|---|
| Priority | Resources being reserved for higher priority job. This is particularly common on Burst QOS jobs. Refer to the Choosing QOS for a Job page for details. |
| Resources | Required resources are in use |
| Dependency | Job dependencies not yet satisfied |
| Reservation | Waiting for advanced reservation |
| AssociationJobLimit | User or account job limit reached |
| AssociationResourceLimit | User or account resource limit reached |
| AssociationTimeLimit | User or account time limit reached |
| QOSJobLimit | Quality Of Service (QOS) job limit reached |
| QOSResourceLimit | Quality Of Service (QOS) resource limit reached |
| QOSTimeLimit | Quality Of Service (QOS) time limit reached |
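To see the reason recorded for each of your own pending jobs, you could wrap squeue in a small shell function such as the hypothetical pending_reasons below; -t PENDING limits the listing to pending jobs, and -o selects the columns to print.

```bash
#!/bin/bash

# List your own pending jobs with job ID, job name, and the scheduler's pending reason
# (%i = job ID, %j = job name, %r = reason; the numbers set each column's width).
pending_reasons() {
    squeue -u "${USER:-$(id -un)}" -t PENDING -o "%.18i %.30j %.25r"
}

# Example usage:
#   pending_reasons
```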

Data Transfers

Globus

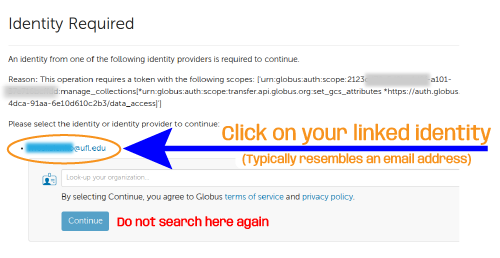

Why do I see an 'identity cannot be linked to itself' error in Globus when providing consent to access a collection?

This error comes up when you attempt to link to a Globus identity that has already been linked. In the Globus interface, you should see one or more identities listed above the "Look up your organization" text field and the 'Continue' button. Click the hyperlinked identity listed above the organization lookup text box to confirm the use of an already linked identity. Do not select the organization and click Continue in an attempt to use an identity that is already listed above; doing so will only lead you in circles, showing the error discussed here.